Tetrate Agent Router Service

The Tetrate Agent Router Service provides a unified gateway for accessing various AI models with fast inference.

This gateway acts as an intelligent router that can distribute requests across multiple model providers, offering enterprise-grade reliability and performance optimization.

To get started quickly, sign up for the Tetrate Agent Router Service to get an API key, then use Tetrate on Continue Mission Control.

Setup

1. Sign in or sign up

   Visit the Agent Router Service portal and create an account to get your API key.

2. Get your API key

   Go to the API keys page to get your key.

3. Configure Continue

   - Choose a configuration method below.
   - If you use the Continue VS Code extension, install version >=1.2.3.

Quickstart with Continue Mission Control

Fastest way: use preconfigured models from Tetrate on Continue Mission Control

1

Browse models

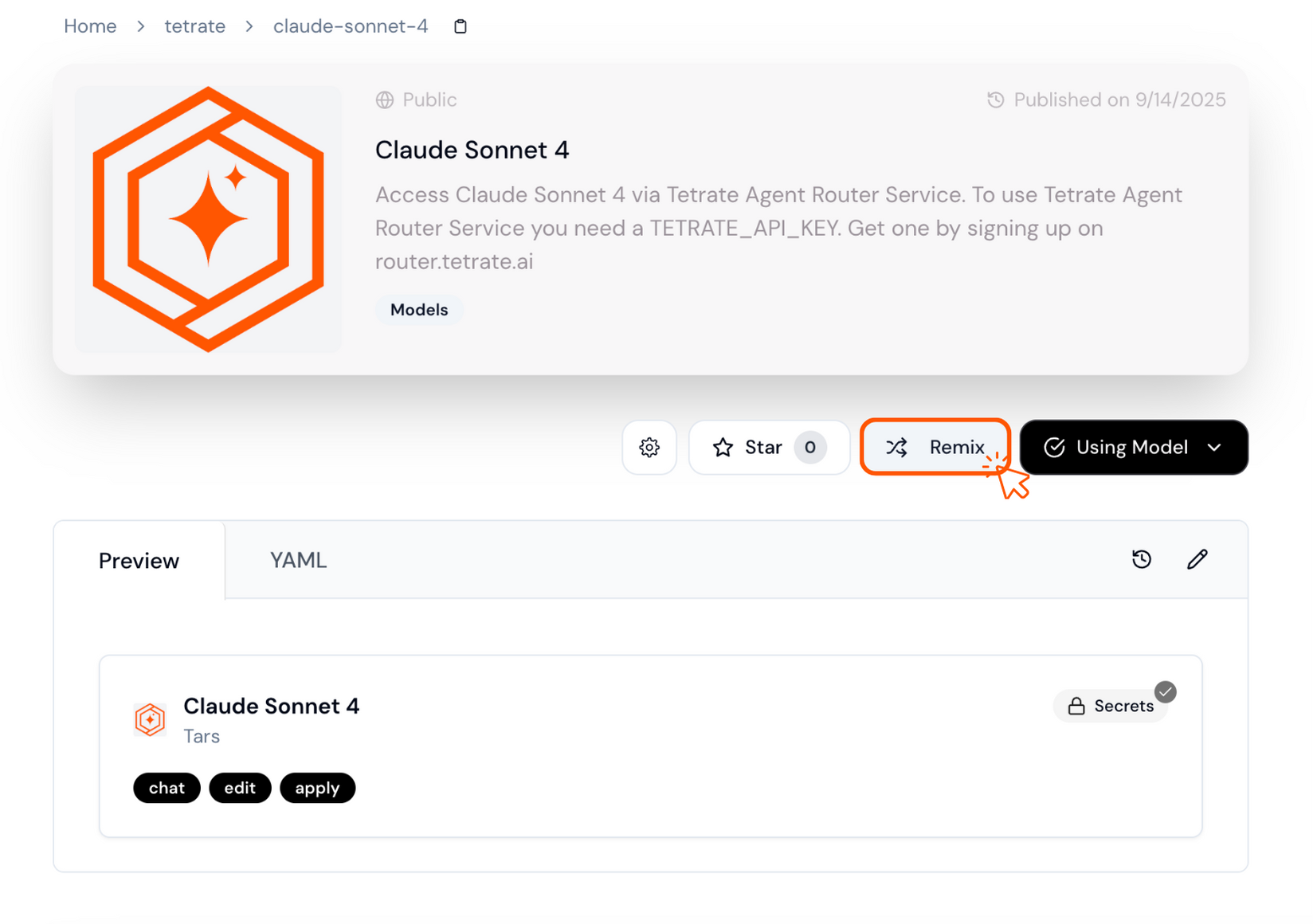

Open Tetrate on Continue Mission Control and pick a model (e.g., Claude Sonnet 4)

2

Use or remix

Click "Use Model", or "Remix" to change the model ID if needed

3

Add your API key

Add your API key to Continue. See adding secrets in Continue Mission Control and managing local secrets in the FAQ

Add it as a Continue Mission Control secret named TETRATE_API_KEY to reuse across projects.

If a model is missing, remix a similar one and set the model field to the target ID.

Configuration Methods

In Local Agent Configuration

Use a Tetrate model block from the Hub or define it directly in your local agent configuration.

Continue Mission Control

Use a Tetrate model block from the Hub or define your own on the Hub.

Using Local Model Blocks

Create a local model block for reuse across agents without publishing it to the Hub.
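As a rough illustration of a local model block, the sketch below reuses the Claude Sonnet 4 settings from this page in a standalone file. The file name and the `.continue/models/` location are assumptions based on Continue's convention for local blocks and are not stated on this page, so confirm them against the Continue documentation for your version.

```yaml
# Hypothetical path: .continue/models/tetrate-claude-sonnet-4.yaml
# (assumed location for local blocks; verify for your Continue version)
name: Tetrate Claude Sonnet 4
version: 1.0.0
schema: v1
models:
  - name: Claude Sonnet 4
    provider: tars
    model: claude-4-sonnet-20250514
    # Resolved from a secret named TETRATE_API_KEY
    apiKey: ${{ secrets.TETRATE_API_KEY }}
    roles:
      - chat
      - edit
      - apply
```

Keeping the block in its own file lets several local agents share one model definition, so a change to the model ID or roles is made in a single place.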

Configuration Examples

When to use: Simple local setups (like using the VS Code extension) when you don't need shared or published blocks.

Use a Tetrate model block from the Hub in your local agent configuration:

name: Local Agent
version: 1.0.0
schema: v1
models:
  - uses: tetrate/claude-sonnet-4
    with:
      TETRATE_API_KEY: ${{ secrets.TETRATE_API_KEY }}
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

Or define the model directly:

name: Local Agent
version: 1.0.0
schema: v1
models:
  - name: Claude Sonnet 4
    provider: tars
    model: claude-4-sonnet-20250514
    apiKey: ${{ secrets.TETRATE_API_KEY }}
    roles:
      - chat
      - edit
      - apply
    capabilities:
      - tool_use
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

Troubleshooting Common Issues

Invalid API key

Verify your API key is active and has no extra spaces.

Model not found

Confirm the model ID matches the Tetrate catalog.

Slow responses

Check your network or try a less-loaded model. Contact Tetrate support if issues persist.

Configuration not loading

Validate the YAML syntax and review the error messages shown in Continue. If you use the Hub, make sure the block is published.
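When a configuration fails to load, indentation is a likely place to look first. The annotated fragment below is a minimal sketch of the nesting a model entry needs; it reuses the field names from the examples on this page, and the comments describe general YAML rules rather than any Continue-specific validation.

```yaml
# Indent with spaces only; tab characters are not valid YAML indentation.
models:                        # top-level key, starts at column 0
  - name: Claude Sonnet 4      # each model is a list item introduced by "- "
    provider: tars             # sibling keys align with "name", not with the dash
    model: claude-4-sonnet-20250514
    apiKey: ${{ secrets.TETRATE_API_KEY }}  # reference the secret, do not paste the key
    roles:                     # nested list, indented one level deeper
      - chat
      - edit
```

If the file parses but the model still fails, the cause is more likely the model ID or the API key than the syntax.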

Join the Community

Connect with Tetrate and other builders for help and discussion.